|

I am an imaging architect and senior staff research scientist at Meta, working in Ajit Ninan's Imaging Experiences Architecture team in Reality Labs. I previously worked in the Core Display Incubation team at Apple, the Applied Vision Science team at Dolby Laboratories, and the Stereo and Displays group at Disney Research Zurich. I did my PhD in Markus Gross' Computer Graphics Laboratory at ETH Zurich. I got my master's in applied math at IMPA/Visgraf, and my bachelor's in math at the Federal University of Juiz de Fora. |

|

|

I am interested in perception and computer graphics, especially anything involving computational display and psychophysics. Prior work involved perceptual metrics, brightness and color, stereo 3D, and display topics like virtual and augmented reality, frame rate, high dynamic range and more. |

|

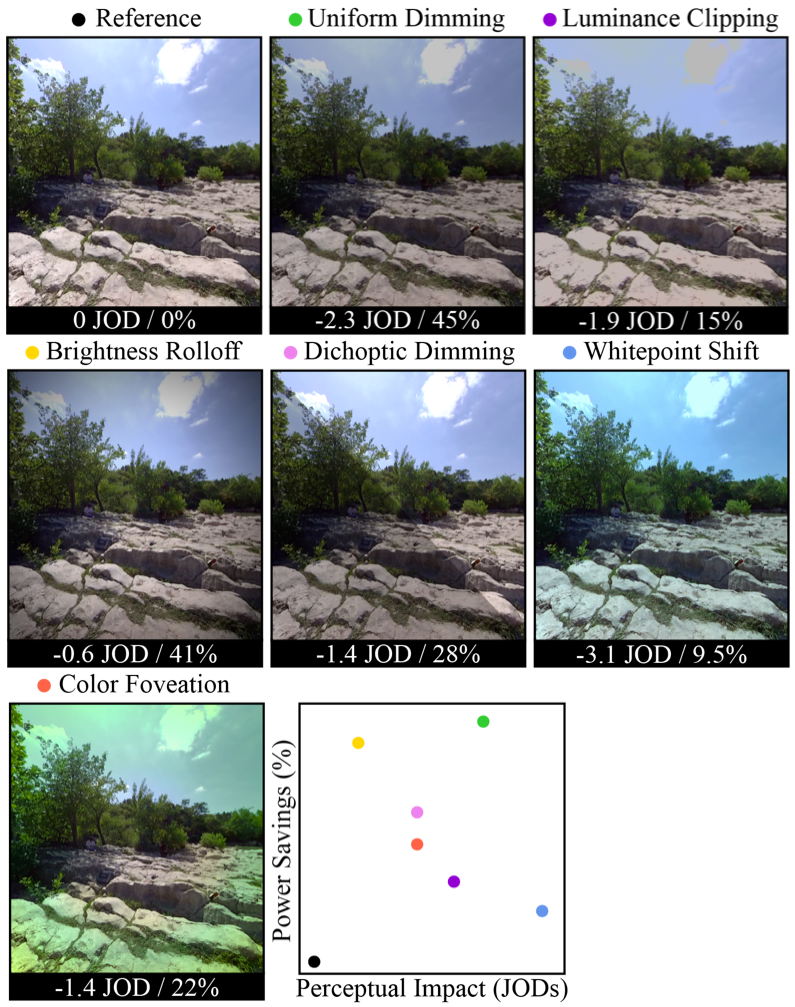

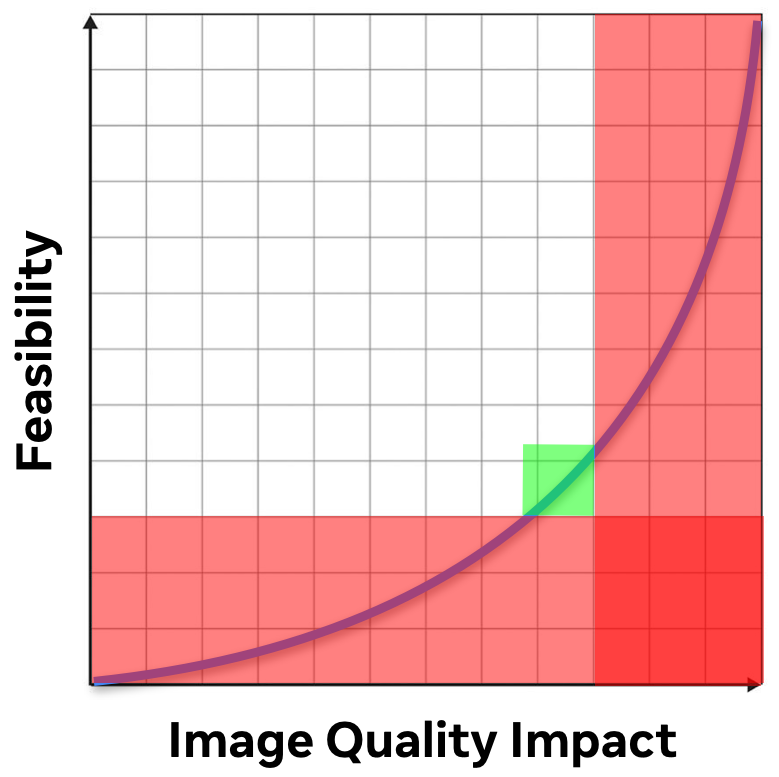

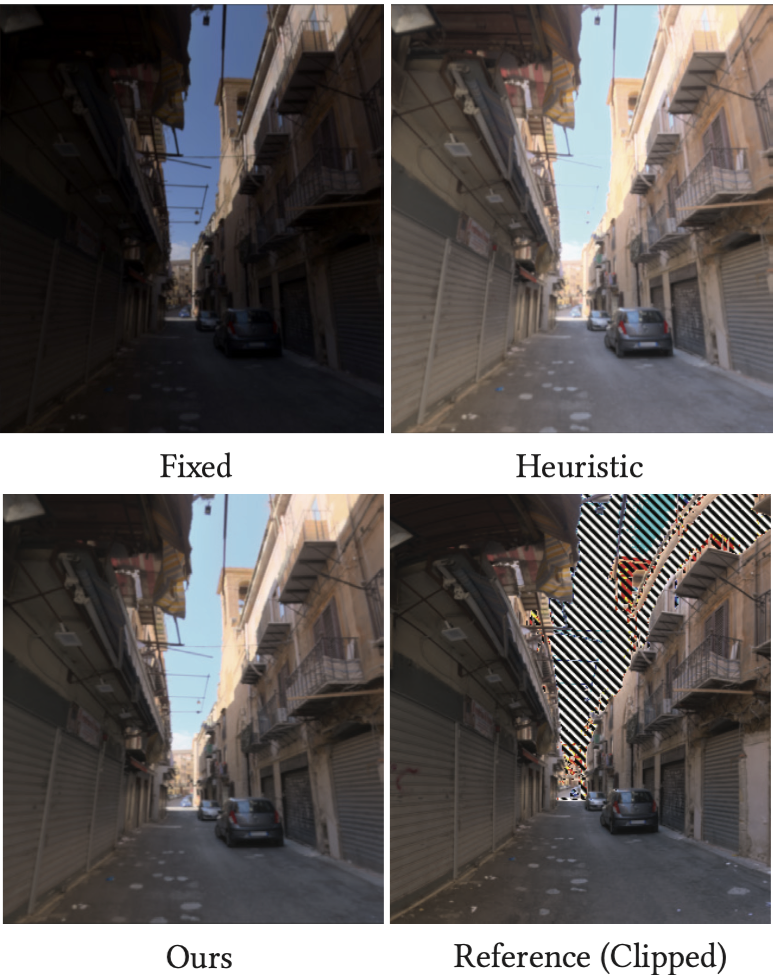

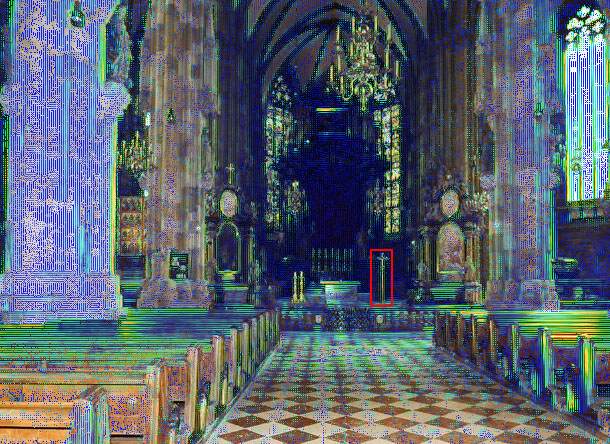

In our SIGGRAPH'24 "PEA-PODS" paper we explored several image modification algorithms to reduce display power consumption while incurring the least perceived error from the original. In this work ("ML-PEA"), a neural net predicts spatially-varying dimming maps for display power optimization, yielding improved performance over legacy methods in some conditions. |

|

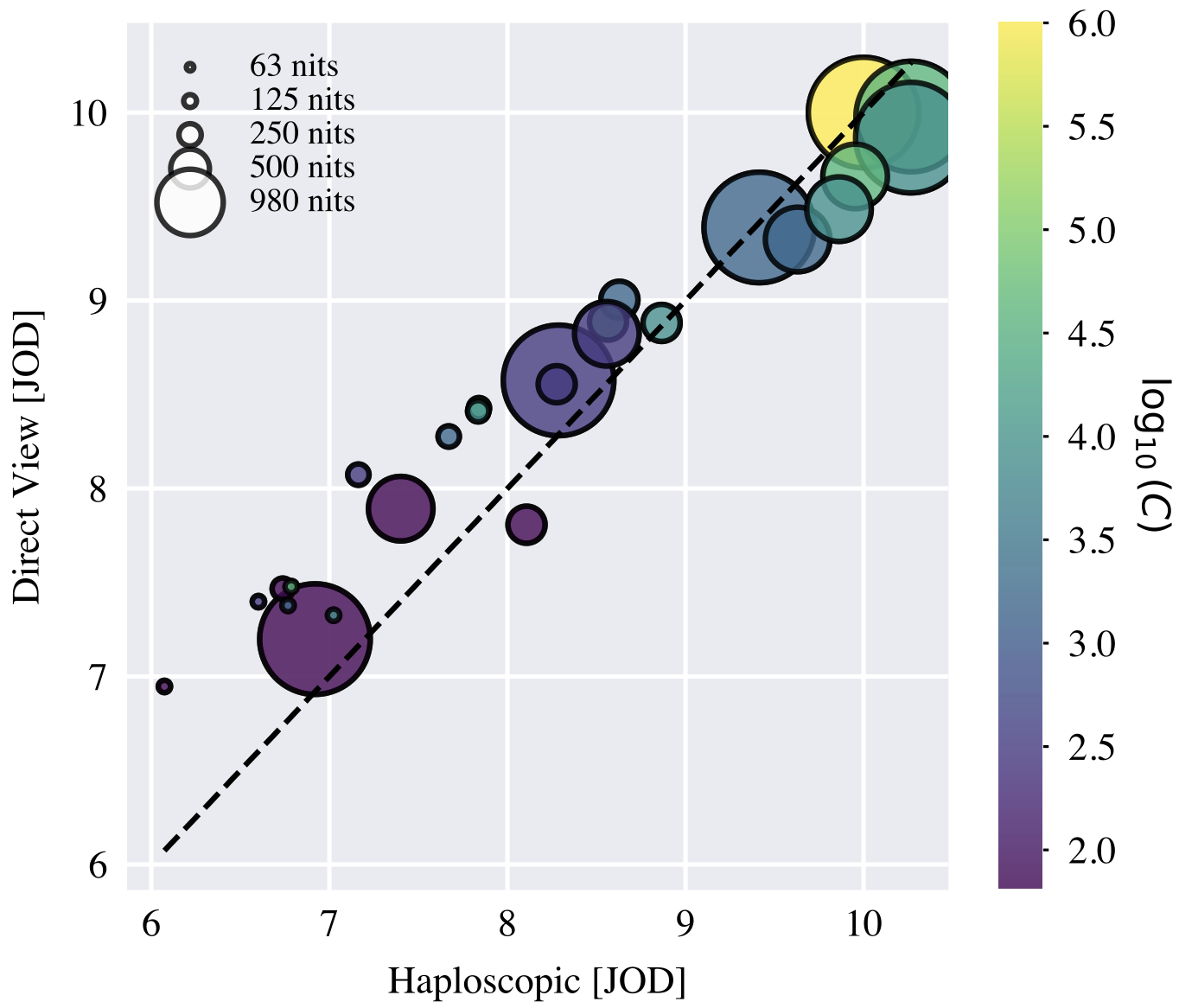

In our "What is HDR?" work in SIGGRAPH 2025, we performed perceptual measurements for preference on HDR displays across luminance and contrast values in VR. In this work, we extended the "What is HDR?" data to direct-view displays, confirming that the results hold well for both traditional and head-mounted displays. |

|

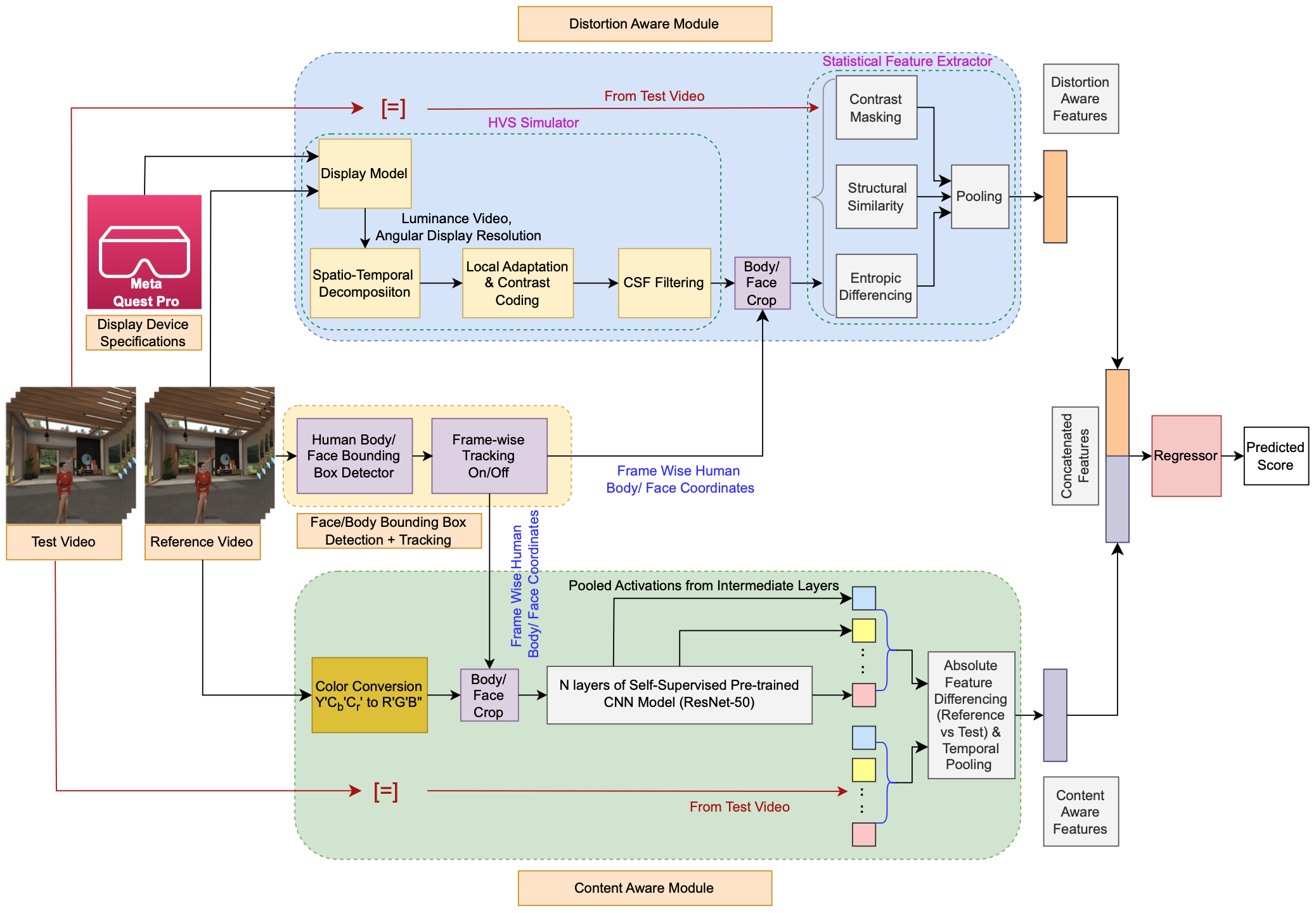

We created a full-reference video quality assessment (VQA) model for human avatars in virtual reality. Standard VQA models often underperform for avatars because they do not account for distortions unique to avatar rendering. HoloQA uses a mixture-of-experts model to address this, combining low- and high-level features in its assessment. |

|

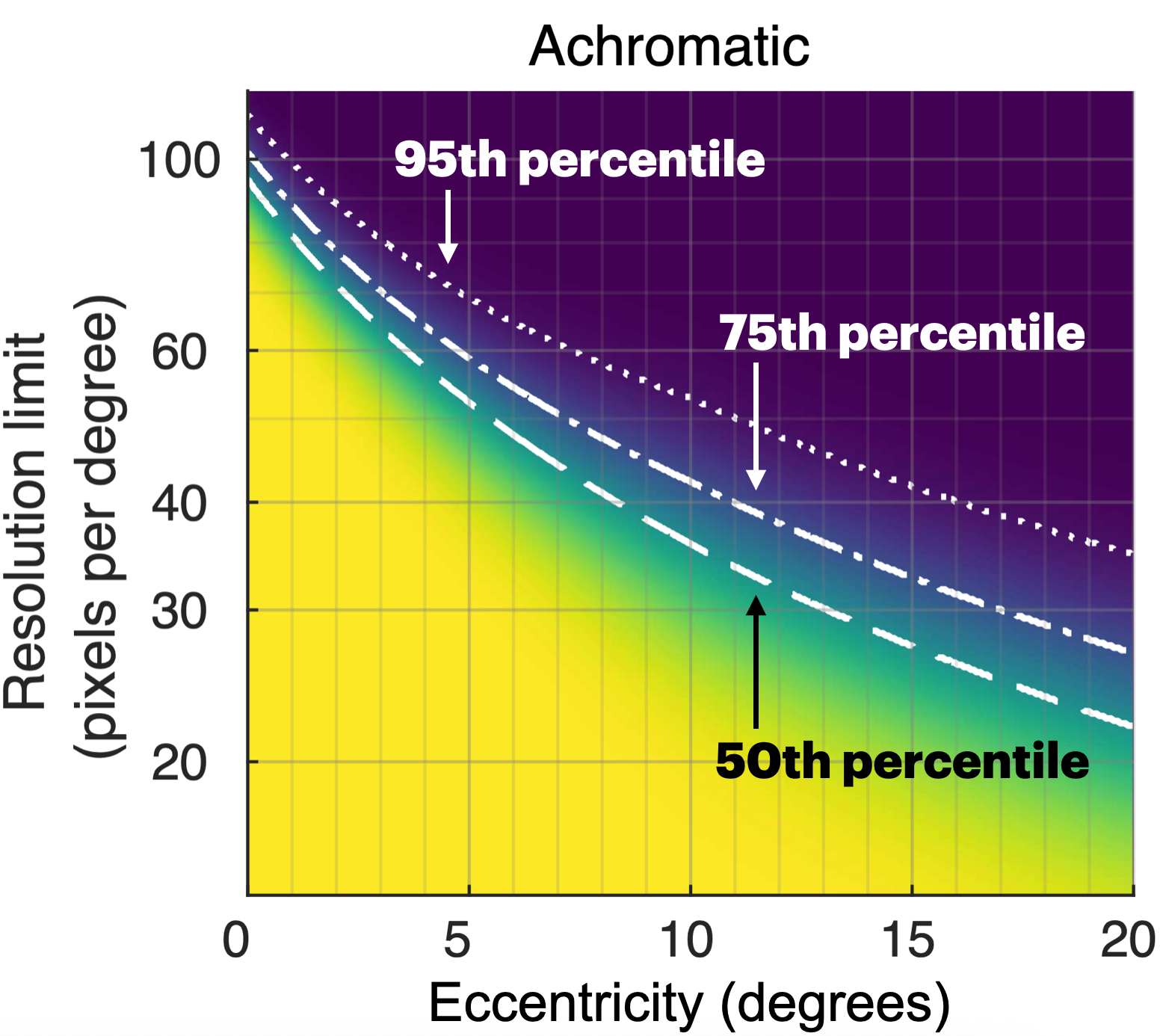

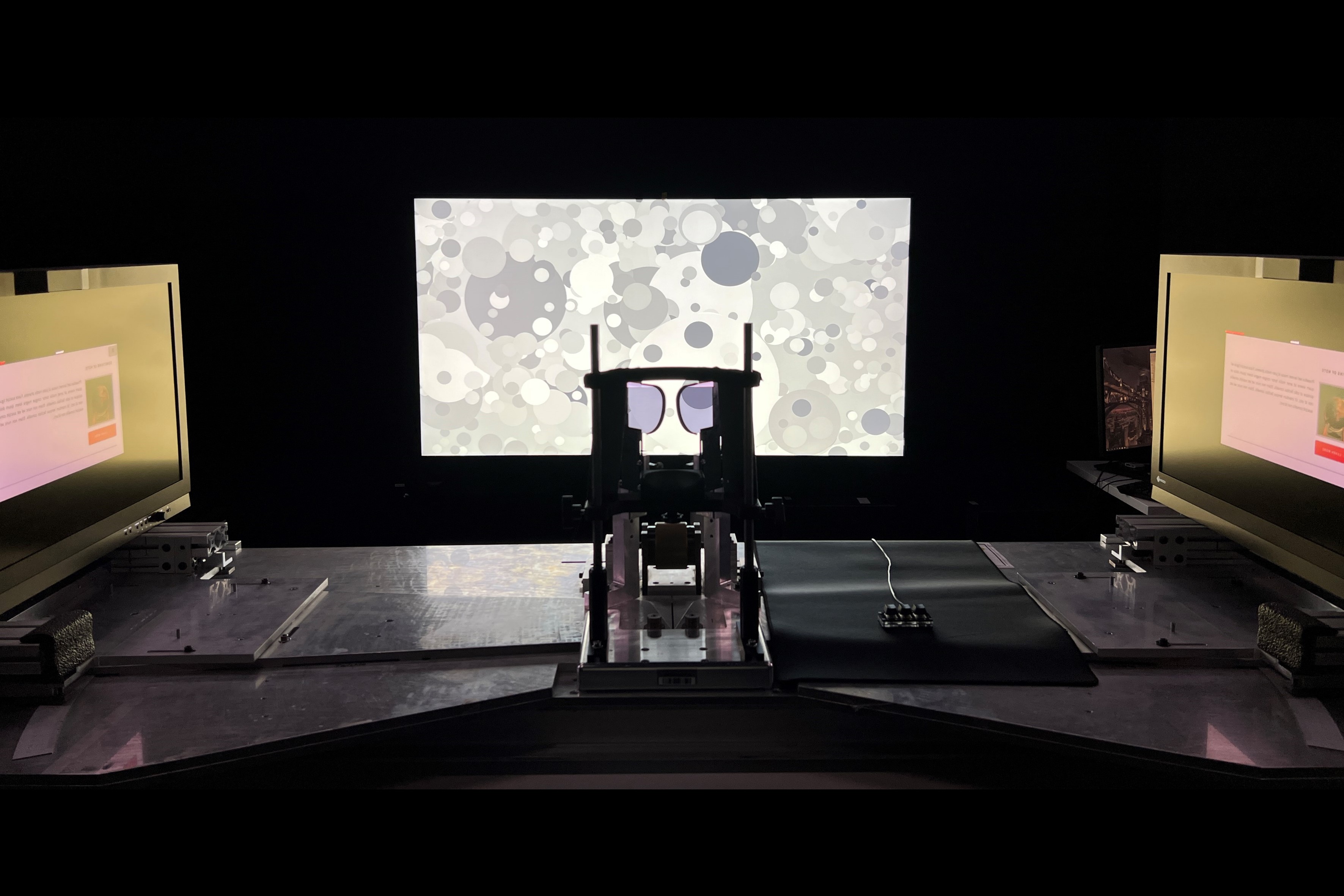

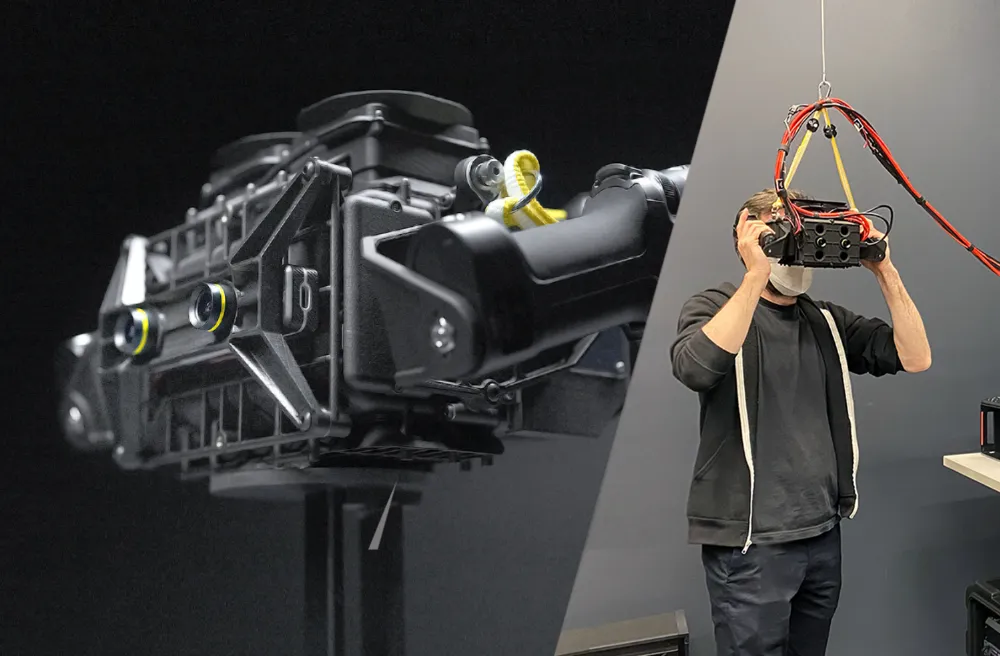

We investigated the "retinal resolution" of a display: the resolution beyond which adding more pixels would make no visible difference. To do this, we built a custom display rig that allowed us to change effective resolution continuously. We collected data for foveal and peripheral, achromatic and chromatic stimuli. Our results set the north star for display development, with implications for future imaging, rendering and video coding technologies. This work received widespread media attention: over 100 outlets, including The Guardian, The Daily Mail, Cambridge Research News, Popular Science, The Debrief, Boing Boing, UploadVR, NPR. |

|

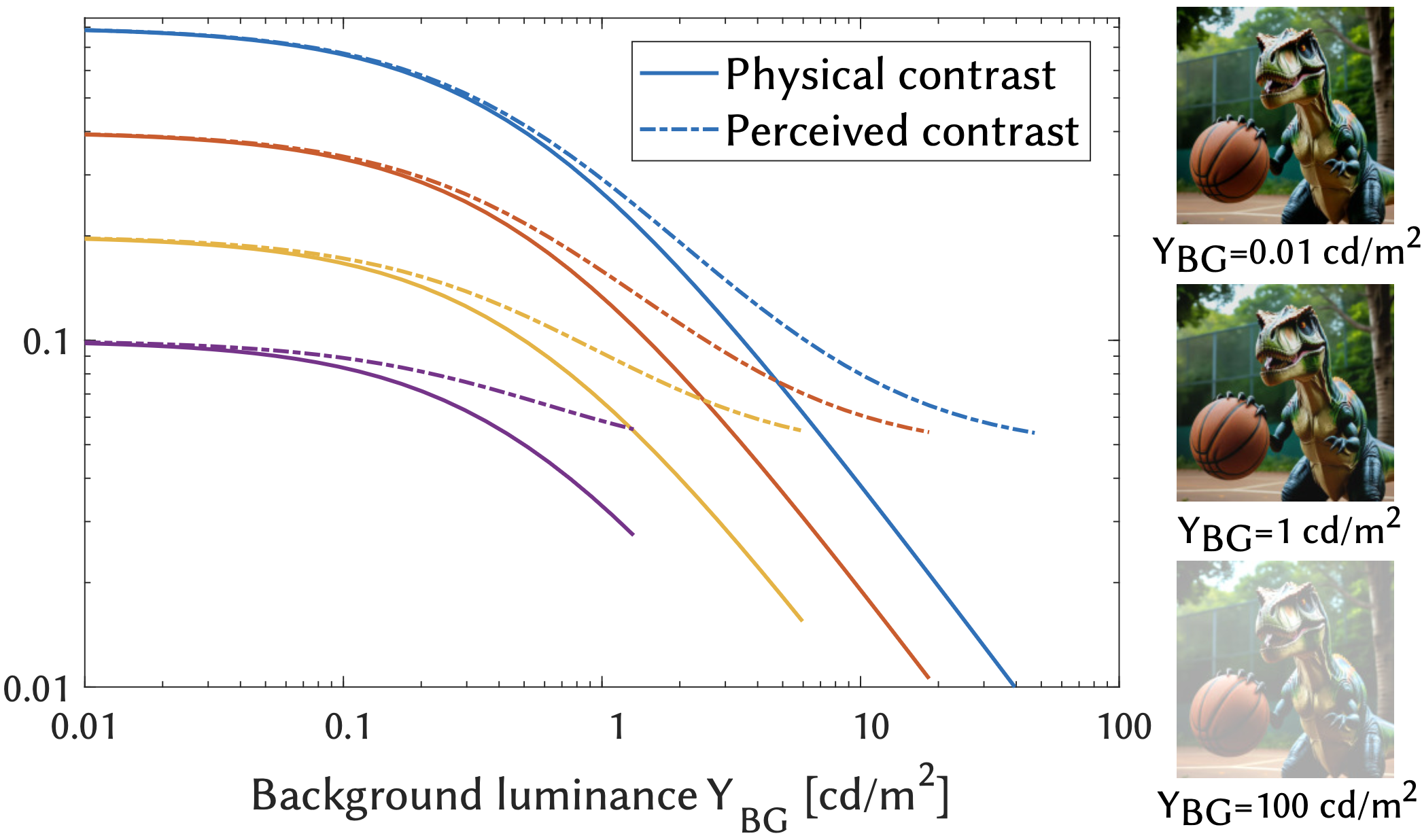

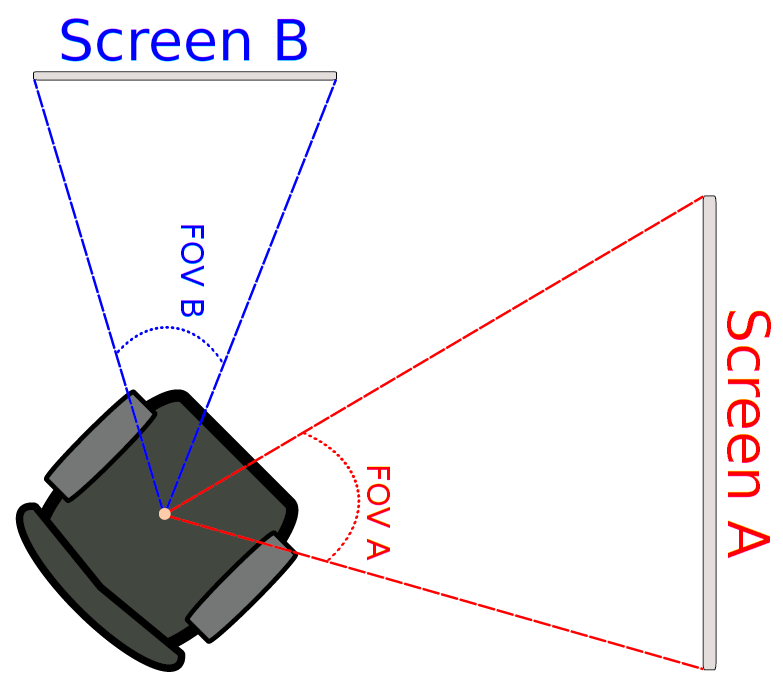

We studied the perception of contrast in Augmented Reality. In particular, we were interested in understanding and modeling how AR image visibility in the presence of an additive background differs from visibility on traditional displays. We found that existing supra-threshold contrast sensitivity models do a good job of explaining the effect, influenced by threshold elevation with added background luminance. |

|

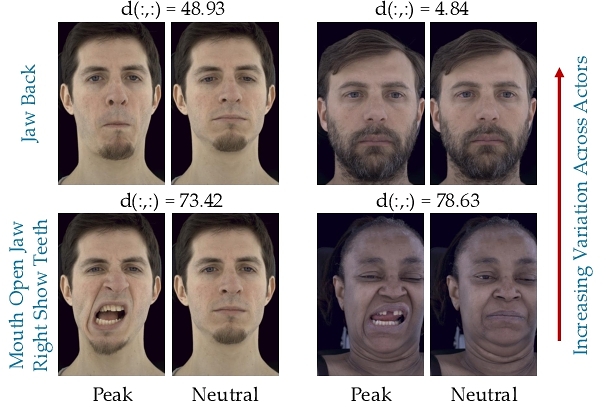

We ran a large-scale user study to quantify the perceived differences between facial expressions. Our dataset covers a diverse range of expressions, and measures both inter- and intra-expression differences on multiple actors. |

|

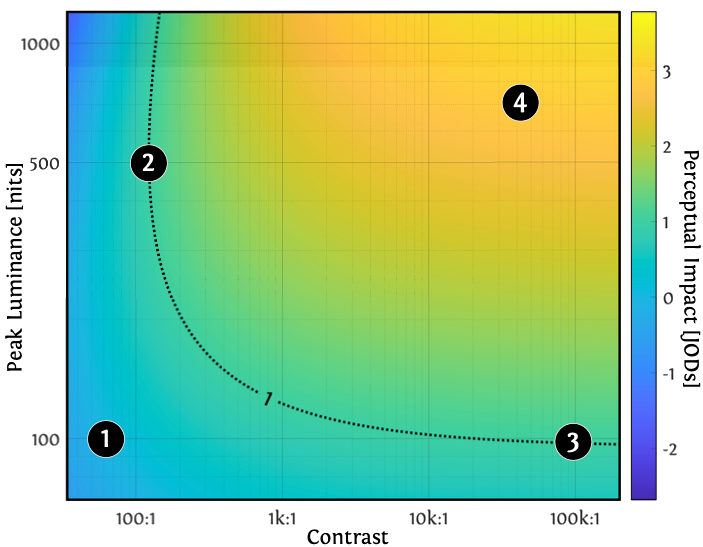

We investigated how the contrast and peak luminance of a display impact user preferences. Our precise large-scale study procedure allowed us to model the results in terms of absolute just-objectionable-difference (JOD) units. This data lets us predict display quality, make decisions on design tradeoffs, and more. |

|

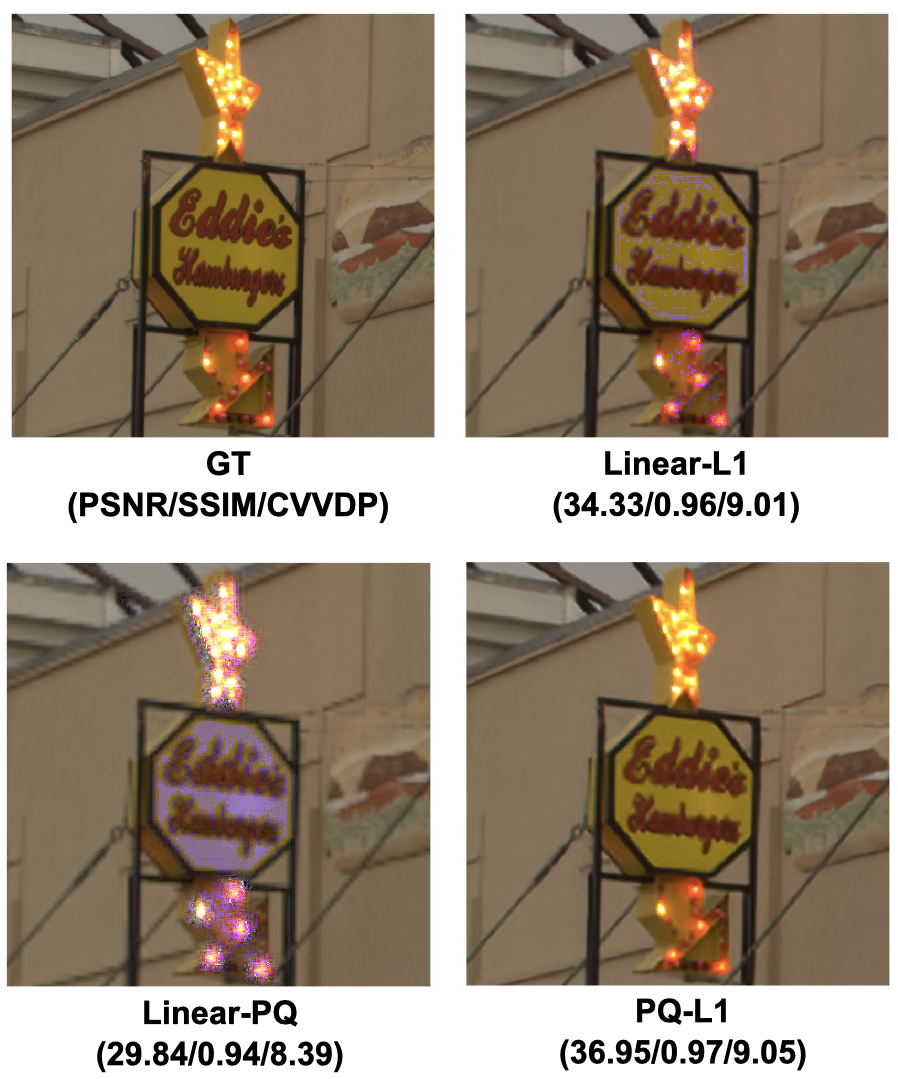

Most content online is in a display-encoded color space (scaled 0-1). This is different from HDR/RAW content, typically scaled linearly in proportion to physical luminance. As a result, popular loss functions produce worse results for HDR/RAW, and there's no consensus on how to do better. We demonstrate that a 1-line change (applying a standard transfer function) can improve network performance for HDR/RAW image restoration tasks by 2-9 dB. |
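As a rough illustration of the idea, the sketch below encodes linear luminance with a standard transfer function before computing the loss. It assumes the PQ curve (SMPTE ST 2084) as the transfer function and a plain L1 loss; both choices, and the names `pq_encode` and `encoded_l1_loss`, are illustrative assumptions and not necessarily the configuration used in the paper.

```python
# SMPTE ST 2084 (PQ) constants, as defined in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # luminance that PQ maps to a code value of 1.0


def pq_encode(luminance_nits):
    """Map absolute luminance (cd/m^2) to a PQ code value in [0, 1]."""
    y = min(max(luminance_nits, 0.0), PEAK_NITS) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def l1_loss(pred, target):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def encoded_l1_loss(pred_nits, target_nits):
    """The "1-line change": encode both signals before the loss (hypothetical helper)."""
    return l1_loss([pq_encode(p) for p in pred_nits],
                   [pq_encode(t) for t in target_nits])
```

With this encoding, a 1-nit error in a dark region contributes more to the loss than a 1-nit error near 1,000 nits, which is closer to how visible the two errors would be.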

|

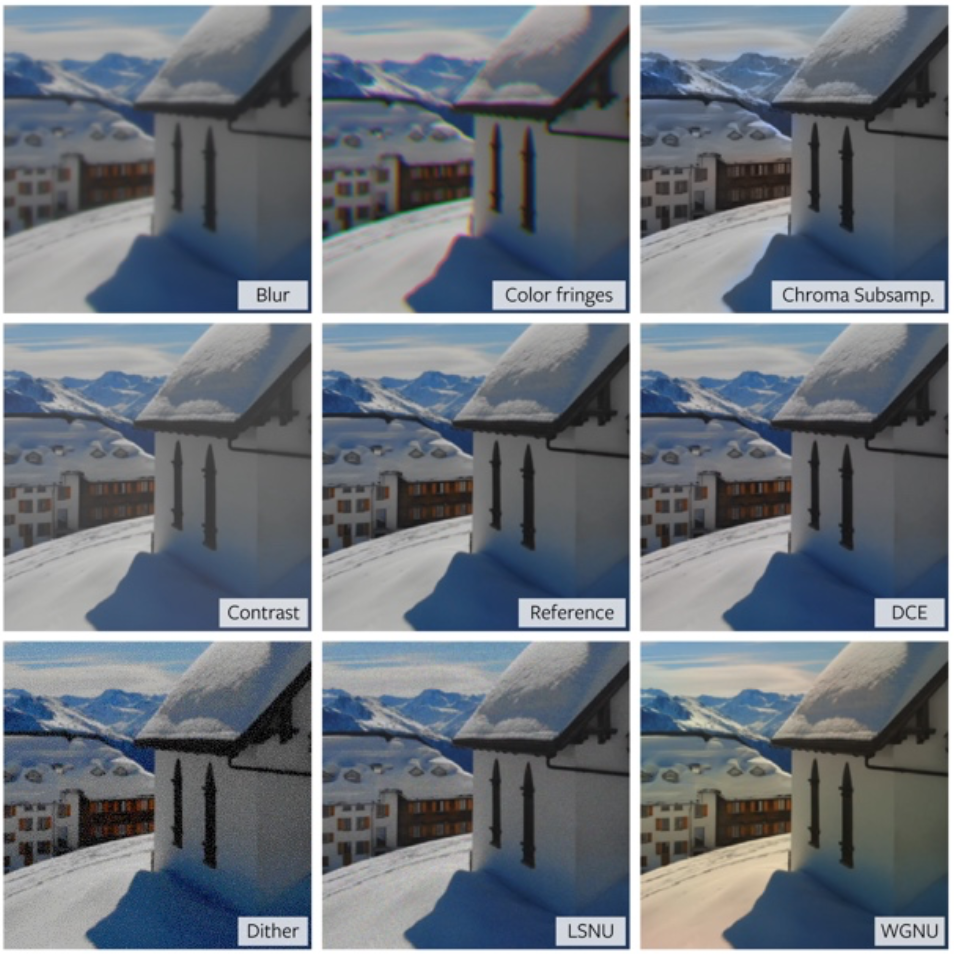

The perception of distortions in augmented reality (AR) is very different than on traditional displays because of the presence of very bright see-through ambient conditions. We quantified this effect by conducting the first large-scale user study on the visibility of display artifacts in an AR setting. These results can help researchers understand visibility in AR and develop computational methods for this new, exciting platform. |

|

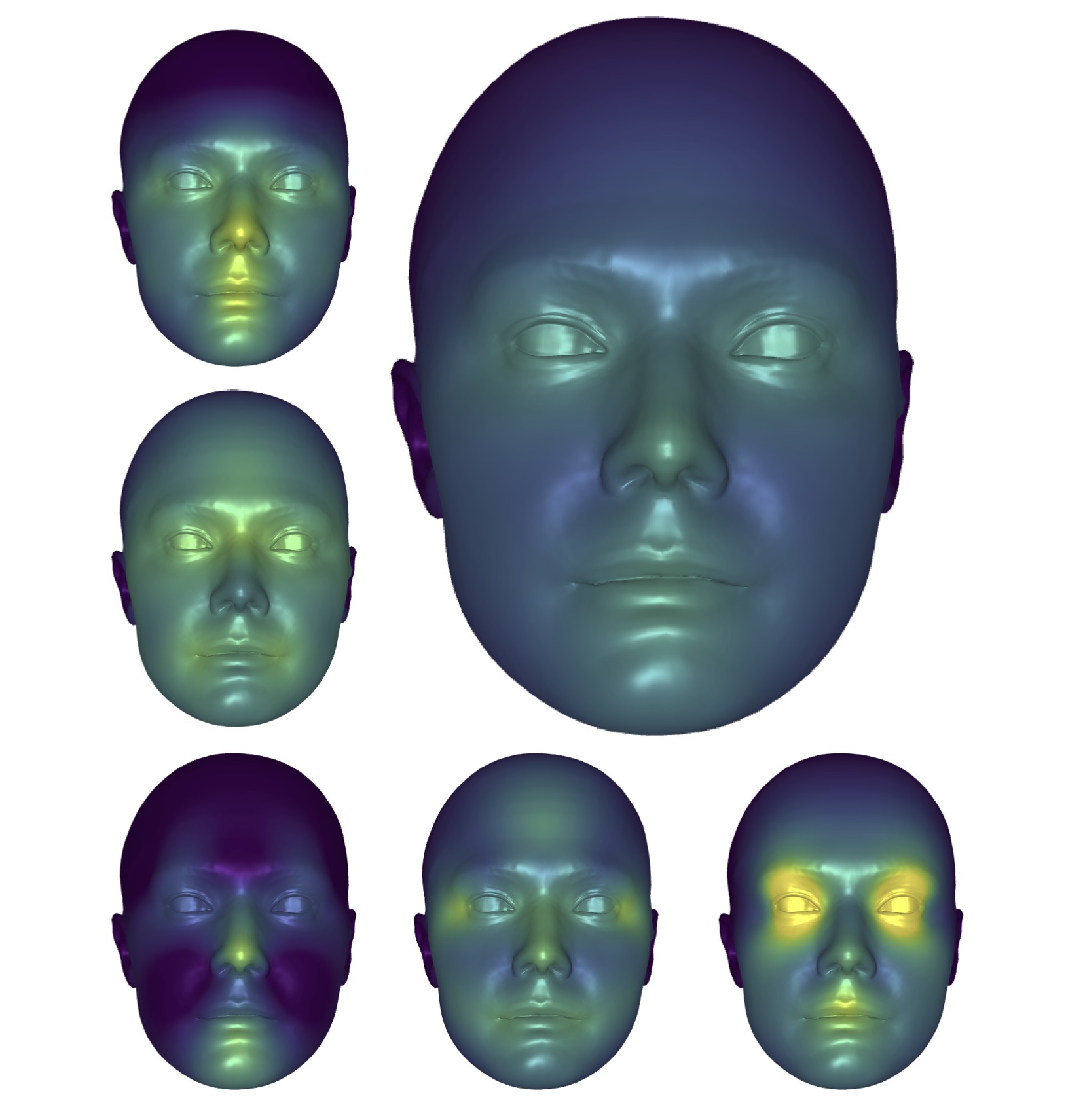

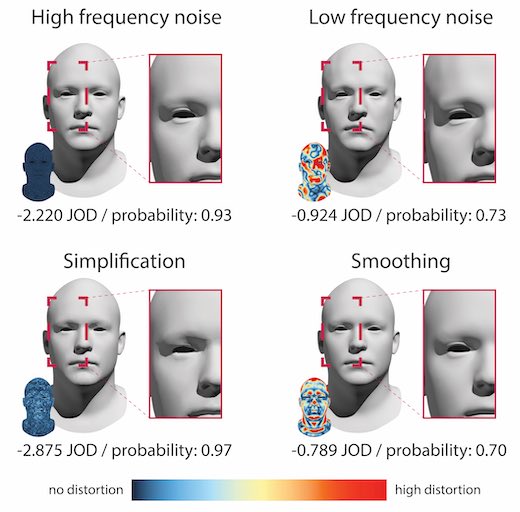

We created a saliency map for the human face, based on how visible perceptual distortions are per region. Our results are intuitive (e.g. texture distortions are especially visible on the eyes, and geometry distortions on the nose), and also provide a robust quantifiable prior that can be integrated into graphics pipelines for optimal resource distribution when rendering human faces. We demonstrate this via applications, like saliency-driven 3D Gaussian Splatting avatars. |

|

We evaluated the perceived impact of several graphics distortions relevant for video streaming on full body human avatars. Creating large-scale datasets such as these for human avatars is important, because the perception of artifacts on human characters is different from other types of objects. |

|

|

In this work we explored several perceptual algorithms (PEAs) for power optimization of displays (PODs). Different methods may introduce alterations of different types, and save more or less power depending on the display technology. We compare like-to-like in a user study, obtaining interpretable results for perceptually subtle breakpoints on how much display power can be saved across a wide range of technologies: LCD, OLED, with or without eye tracking, and more. |

|

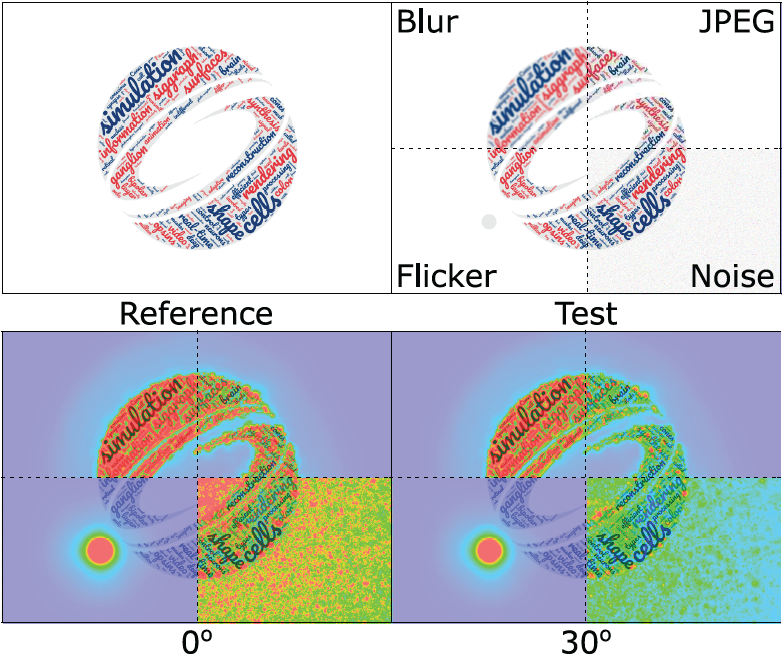

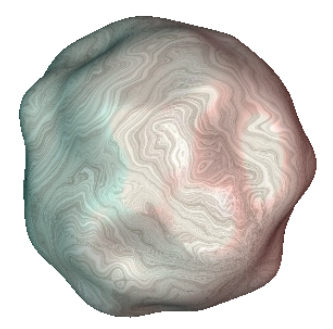

This paper continues our research into perceptual video difference metrics. ColorVideoVDP is the first fully spatio-temporal color-aware metric. It models display geometry and photometry, produces an output in interpretable just-objectionable-difference units, and runs fast. Code is available on GitHub, and extensive documentation and comparisons with other methods in the literature can be seen on the project page. |

|

This short paper and presentation given at SID Display Week 2024 in San Jose gives a quick overview of different aspects of image/video difference metrics, and some of the philosophy behind our work on VDP metrics, in particular ColorVideoVDP. |

|

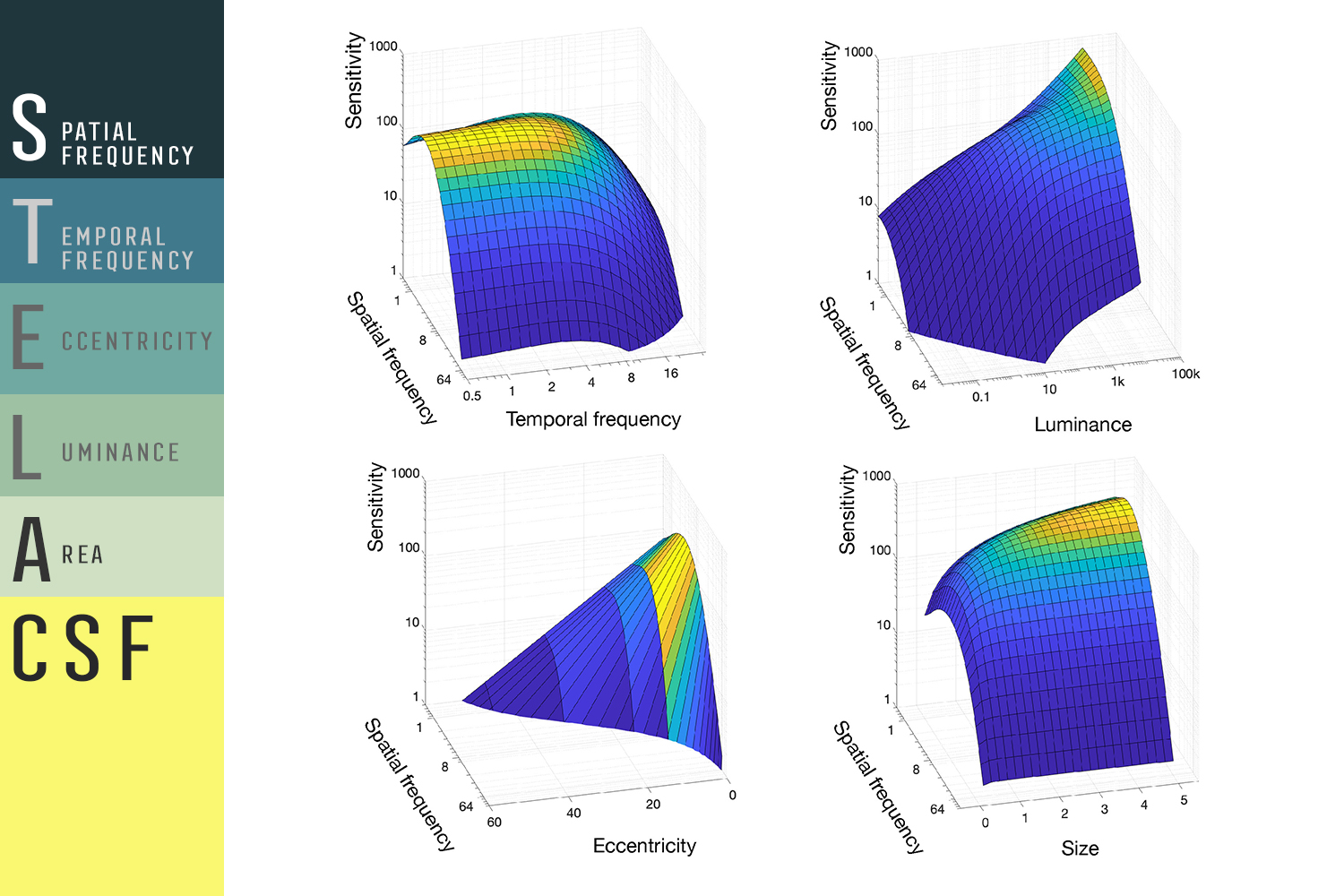

We followed up on our work unifying contrast sensitivity datasets from the literature by integrating color. This extends our data-driven CSF model to now cover color, area, spatiotemporal frequency, luminance and eccentricity. Our model performs better than any existing work, and importantly covers critical perceptual parameters necessary for display engineering and computer graphics tasks. Data, code, and additional information are available on our project page. |

|

We created a perceptual framework that determines the optimal parameters for a tone mapper in real time (<1 ms per frame on Quest 2). We use this system and the Starburst HDR VR prototype to demonstrate that content shown with an optimized version of the Photographic TMO is preferred to heuristics or unoptimized versions even when display luminance is reduced tenfold. |
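For readers unfamiliar with the Photographic TMO, here is a minimal sketch of the classic global operator from Reinhard et al. (2002). The parameters `key` and `l_white` are the kind of values an optimizer could tune per frame; the function names and default values are illustrative assumptions, and the perceptual optimization itself is not shown.

```python
import math


def log_average(luminances, delta=1e-6):
    """Log-average (geometric mean) luminance of the scene."""
    return math.exp(sum(math.log(delta + l) for l in luminances) / len(luminances))


def photographic_tmo(luminances, key=0.18, l_white=4.0):
    """Global photographic operator: scale by key, then compress.

    key     -- target middle-gray value (assumed default 0.18)
    l_white -- smallest scaled luminance that maps to pure white
    """
    scale = key / log_average(luminances)
    out = []
    for lw in luminances:
        l = scale * lw  # scaled luminance
        out.append(l * (1.0 + l / (l_white ** 2)) / (1.0 + l))
    return out
```

A scaled luminance equal to `l_white` maps exactly to 1.0, and larger values exceed 1.0 and would be clipped by the display.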

|

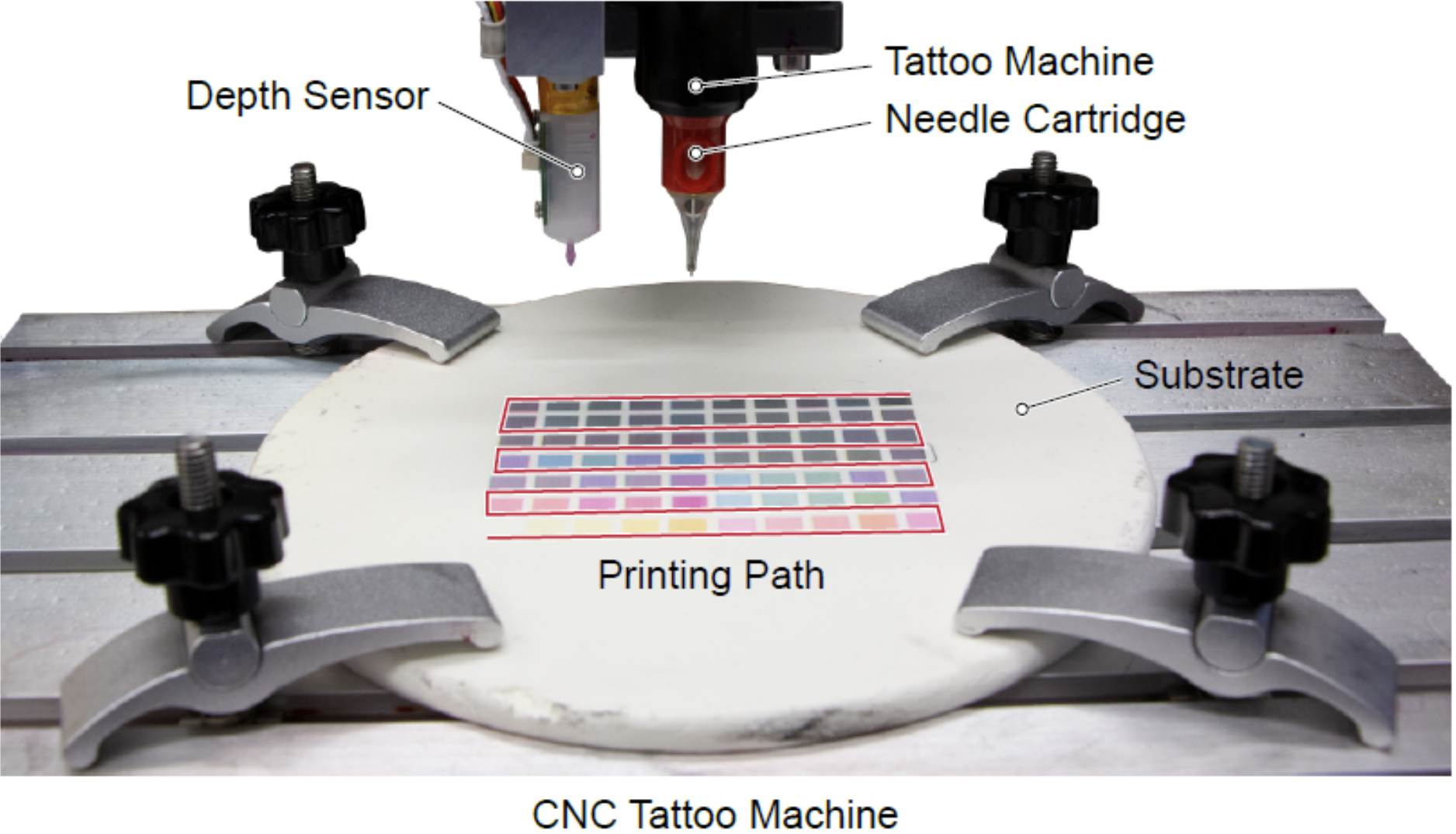

In this work, we examined tattoos through the lens of computational fabrication. To build our model, we created an automatic tattoo robot, and processes to generate synthetic skins to experiment on. We created a framework to predict and modify tattoo color for different skin tones, which we hope will lead to better tattoo quality for everyone. This work also has medical and robotics applications! |

|

Flicker is a common temporal artifact that is affected by many parameters like luminance and retinal eccentricity. We gathered a high-luminance dataset for flicker fusion thresholds, showing that the popular Ferry-Porter law does not generally hold above 1,000 nits, and the increase in sensitivity saturates. |
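For reference, the Ferry-Porter law is usually written as a linear relation between the critical flicker fusion frequency (CFF) and the logarithm of luminance $L$. The constants $a$ and $b$ below are generic fitted parameters that depend on conditions such as retinal eccentricity; they are not values from our dataset.

```latex
\mathrm{CFF}(L) = a \log_{10} L + b
```

Saturation means that at high luminance the measured CFF falls below this straight line.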

|

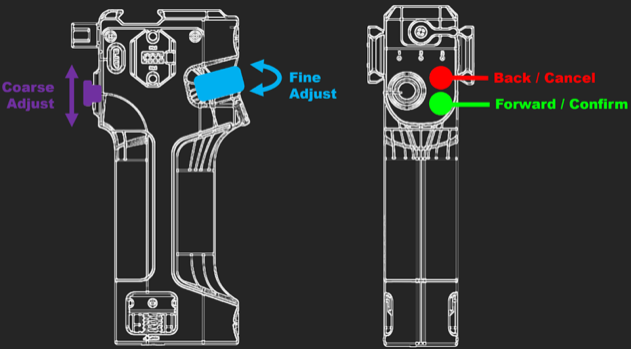

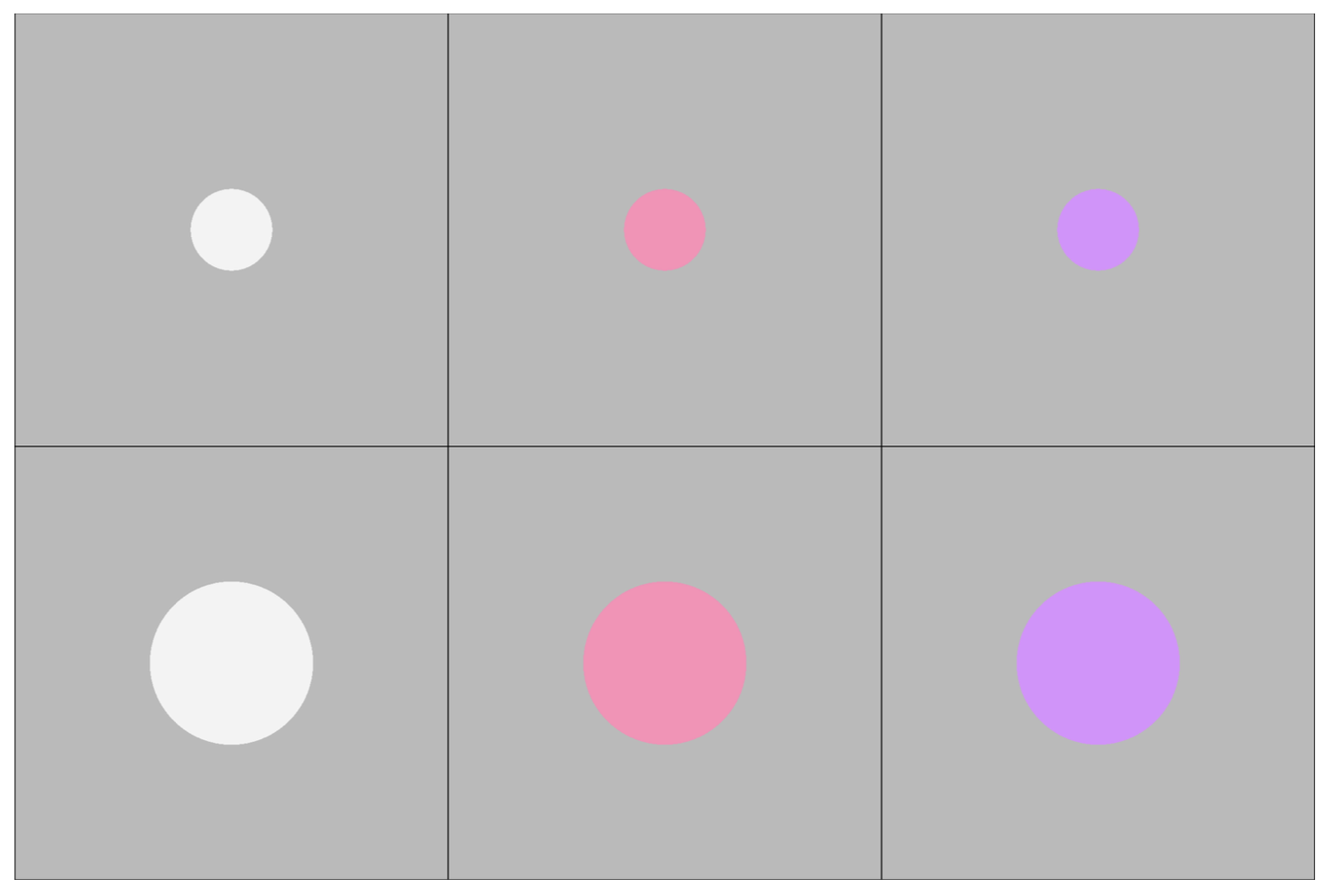

Studies on spatial and temporal sensitivity are often done using different types of stimuli. We studied how experiments conducted using discs can be predicted using data for Gabors. This can lead to more comprehensive models calibrated on both types of data. |

|

We study the perception of geometric distortions. We create a novel demographically-balanced dataset of human faces, and find the perceived magnitudes of several relevant distortions through a large-scale subjective study. |

|

We used the Starburst HDR VR 20,000+ nits prototype display to run a study measuring user preferences for realism when immersed in natural scenes. We found that user preference extends beyond what is available in VR today, and changes significantly between indoor and outdoor scenes. |

|

Our HDR VR prototype display can reach brightness values over 20,000 nits. This work won "best in show" in the Emerging Technologies section of SIGGRAPH'22, and has received widespread media attention: Adam Savage's Tested, CNET, UploadVR, DigitalTrends, TechRadar, Mashable, RoadToVR. |

|

We unified contrast sensitivity datasets from the literature, which allowed us to create the most comprehensive and precise CSF model to date. This new model can be used to improve many applications in visual computing, such as metrics. Data, code, and additional information are available on our project page. |

|

We created a new foveated spatiotemporal metric, following the VDP line of work. This metric is fast, easy to use and has been carefully calibrated on several large datasets. |

|

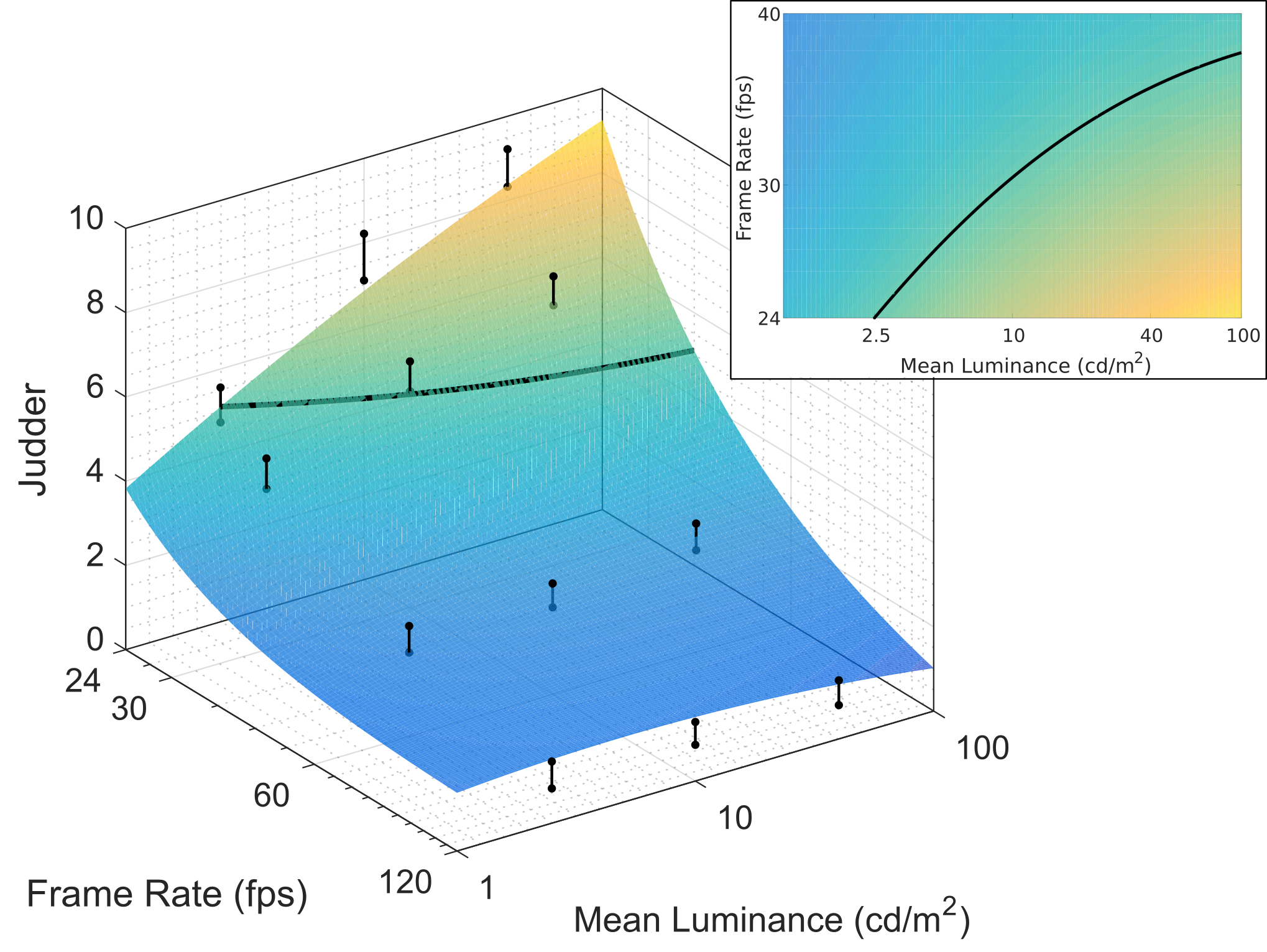

We studied the main perceptual components of judder, the perceptual artifact of non-smooth motion. In particular, adaptation luminance is a strong factor on judder that has changed significantly with modern generations of displays. Errata: In the table of coefficients in Sec. A3, the two-before-last coefficient should be ~0 instead of 1.01. The coefficient is correctly written out in the supplementary material, but not in the manuscript. |

|

Author's version available here, link to ACM TAP version above. We studied the influence of screen size and distance on perceived brightness for screens as large as cinema and as small as mobile phones, an issue that affects artistic intent and appearance matching. |

|

We created an immersive gaming system out of a legacy console. This work was presented at the Eurographics 2015 banquet, and the Ludicious game festival in Zurich. It also received wide media attention: arstechnica, engadget, 20minuten, konbini, xataca, gamedeveloper, factornews, boingboing, and has over 200,000 views on YouTube. |

|

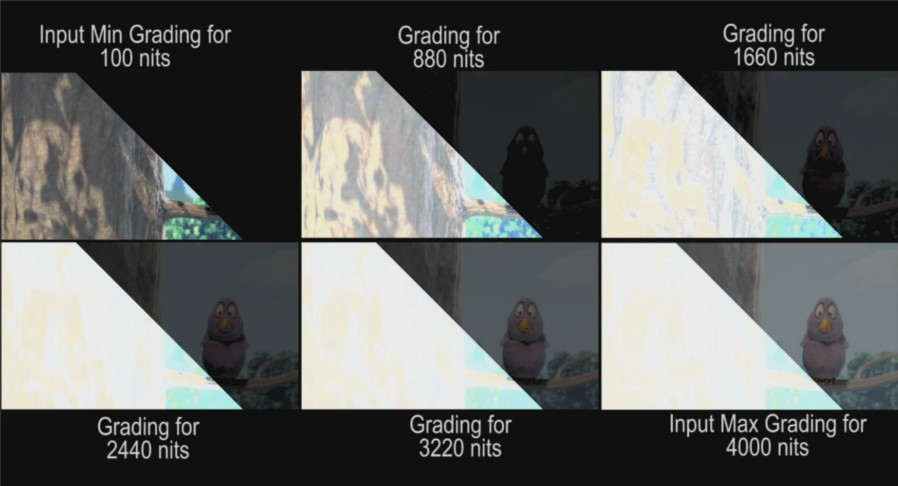

We defined the production and distribution challenges facing the content creation industry in the current HDR landscape and proposed Continuous Dynamic Range video as a solution. |

|

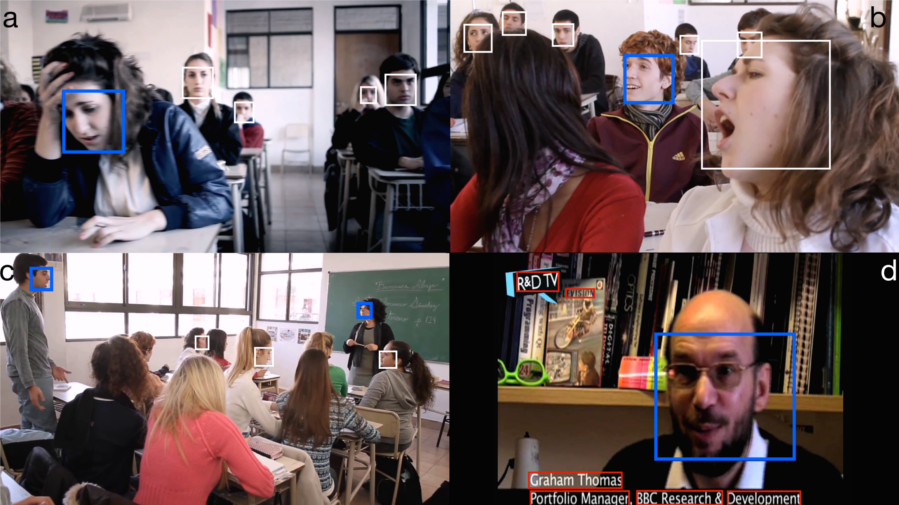

We developed a pipeline to segment and analyze video. Our technique could be applied for smarter editing. |

|

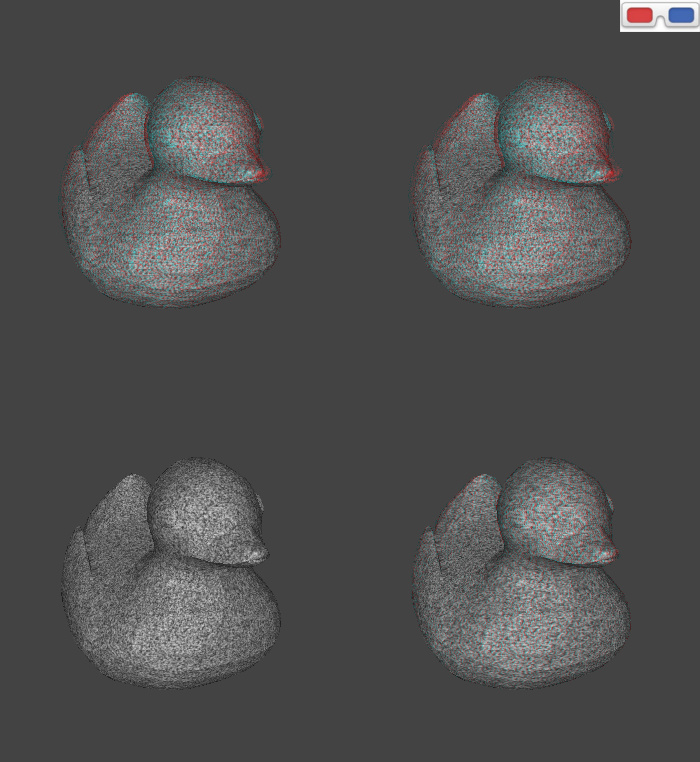

We use non-photorealistic shading as an alternative 3D cue, augmenting the feeling of depth in stereoscopic images. |

|

We conducted perceptual experiments to quantify cardboarding, an artifact that occurs when not enough depth is given to a stereoscopic 3D image region. |

|

We measured perceptual aspects of autostereo content and created a depth re-mapping algorithm that tries to optimize content so that the most important regions have a fuller sense of depth while staying within the limits of the technology. |

|

We created a capture and processing pipeline to generate HDR video on a mobile phone by taking sequential multiple exposures. |

|

We improved our edge detector by using eigenvalues. |

|

We detected high frequencies using an orientation tensor and multiresolution. |

Posters: |

|

|

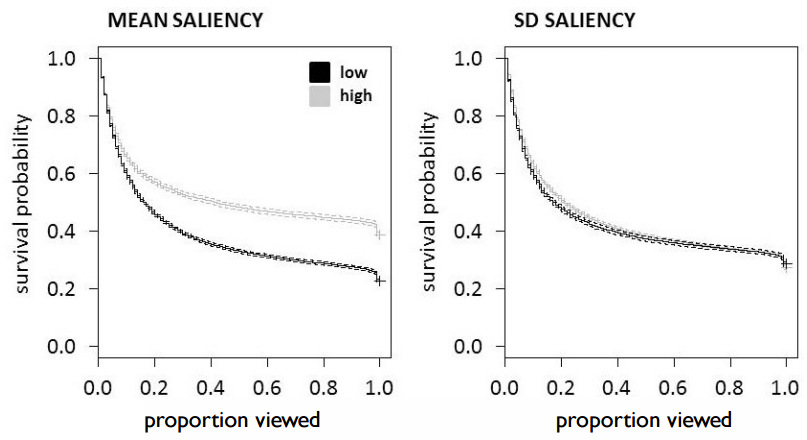

We found that visual saliency can be used as a predictor for video watching behavior in online platforms. |

|

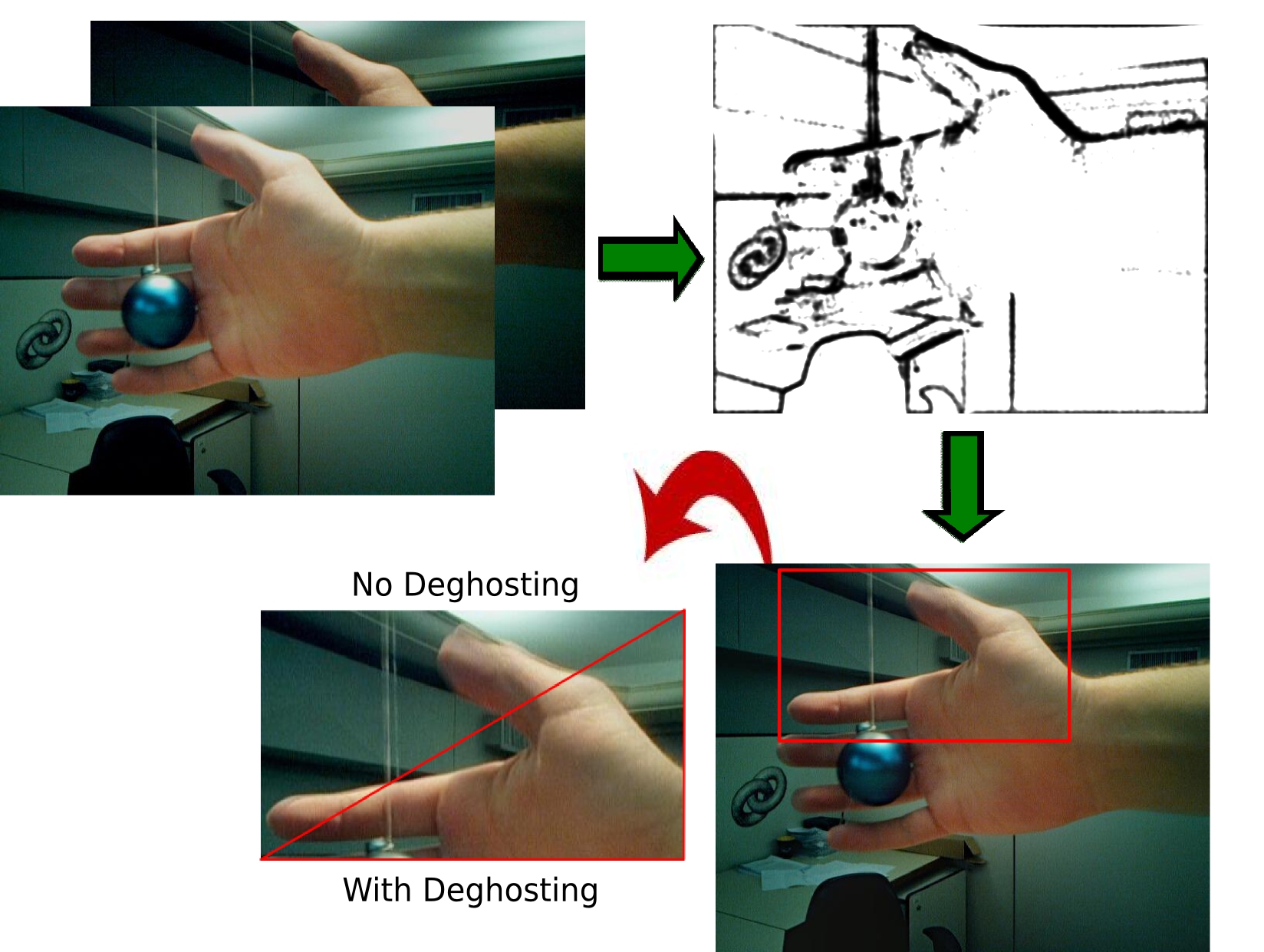

We used an additional per-pixel parameter to avoid ghosting from motion when generating exposure-fusion videos on a mobile phone. (Student research competition semi-finalist) |

|

We created a capture and processing pipeline to generate HDR video on a mobile phone by taking sequential multiple exposures. |

| Additional publications available upon request. |

|
